What is Data Management?

A data management plan (DMP) is a roadmap for how a project will handle its data. It addresses key aspects such as data acquisition, management, storage, and preservation, ensuring the data are well maintained and accessible for future use.

The effectiveness of data management hinges on the usability of the resulting data. Data must be properly documented, stored, and shared to be valuable to researchers, policymakers, and growers. Without proper management, the investment in data collection and analysis can be wasted.

The guidelines in this DMP help us achieve our data-driven goals and maximize the value of our data by supporting information sharing and innovation. Our data management policies aim to implement the FAIR (Findable, Accessible, Interoperable, Reusable) principles while maintaining data privacy (Wilkinson et al. 2016).

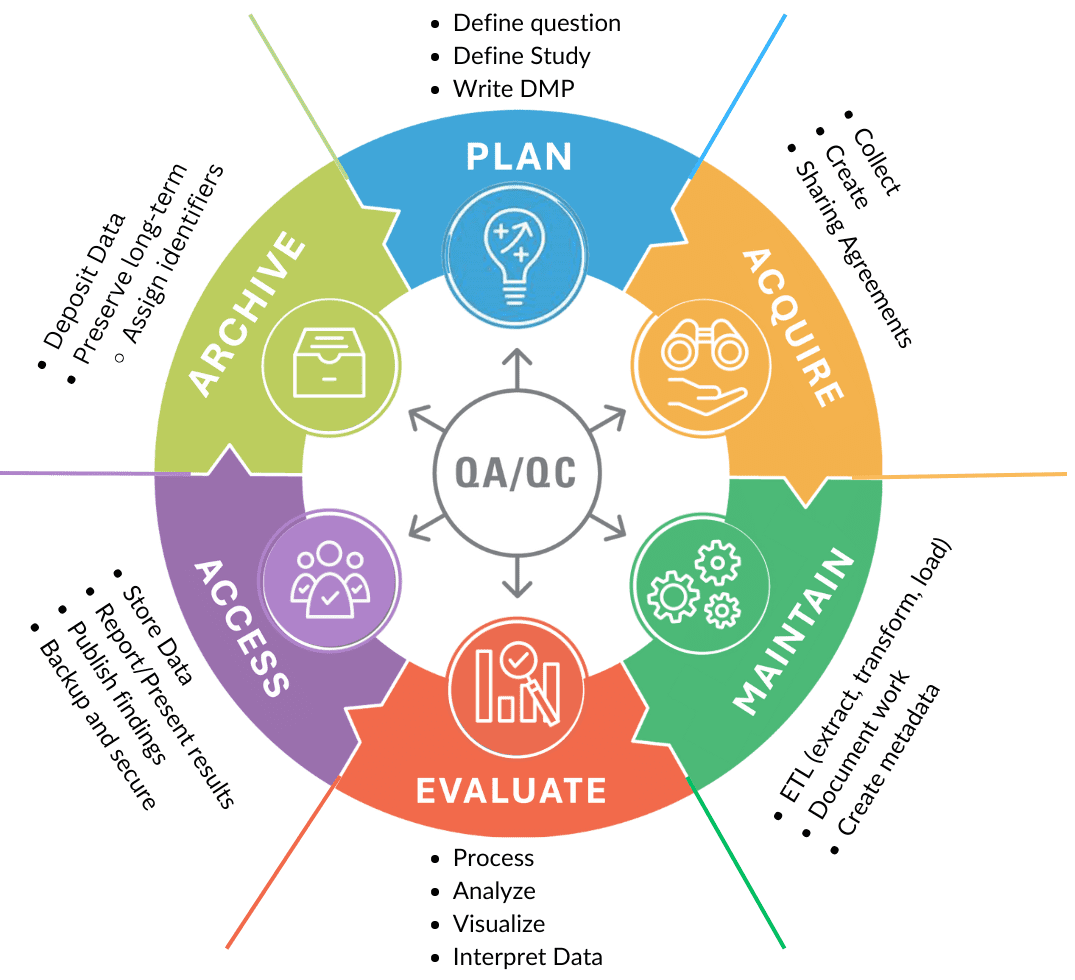

Data Life Cycle

This graphic illustrates the data life cycle (U.S. Fish & Wildlife Service 2023). Each step requires care to ensure transparency, quality, and integrity.

Our adaptation is outlined below, and the following chapters detail the internal processes and standards to follow at each step of the data life cycle.

Plan

Once you have a research question, start envisioning your research design, the location of your project or experiment, the treatments, and the data you will collect.

Planning includes decisions about the following: 1) Collection Methods and Acquisition Source, 2) Data Processing and Workflows, 3) Quality Assurance and Quality Control, 4) Formats and Units, 5) Data History, 6) Metadata, 7) Backup and Security, 8) Access and Sharing, 9) Repository, and 10) Archive.

Special projects that deviate from our standard operating procedures require additional planning.

Evaluate

We evaluate data as we process and analyze them, to maximize accuracy and productivity and to minimize the costs of errors and tedious data cleaning. Evaluation workflows should be efficient, well documented, and reproducible. Our evaluated data help us better understand how environmental factors and management decisions affect soil health.
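One way to keep an evaluation step reproducible is to record, alongside its result, the parameters it used and a checksum of its input, so anyone can later confirm they are rerunning the same step on the same data. The sketch below assumes data small enough to serialize as JSON; `run_step`, `drop_below_detection`, and the detection limit are hypothetical illustrations, not part of our documented workflow.

```python
import hashlib
import json
from datetime import datetime, timezone


def run_step(name, func, data, params):
    """Run one processing step and return (result, provenance record).

    Hypothetical helper: the record captures the step name, its parameters,
    a SHA-256 of the JSON-serialized input, and a UTC timestamp.
    """
    input_bytes = json.dumps(data, sort_keys=True).encode("utf-8")
    result = func(data, **params)
    record = {
        "step": name,
        "params": params,
        "input_sha256": hashlib.sha256(input_bytes).hexdigest(),
        "run_at": datetime.now(timezone.utc).isoformat(),
    }
    return result, record


def drop_below_detection(values, limit):
    """Hypothetical example step: drop readings below a detection limit."""
    return [v for v in values if v >= limit]


result, log = run_step(
    "detection_filter", drop_below_detection, [0.1, 0.5, 1.2], {"limit": 0.2}
)
print(result)  # [0.5, 1.2]
print(json.dumps(log, indent=2))
```

Appending each provenance record to a log file kept with the processed data is one simple way to build up the data history described in the planning step.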

Access

Access covers data storage, publication, and security. Raw and processed data, with accompanying metadata, should be stored, backed up, and available for information sharing with our partners. With PI approval, anonymized and aggregated data that do not compromise growers’ personally identifiable information can be made publicly available in a data repository or through a data product or decision-support tool.
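Before a public release, anonymization and aggregation can be combined in one pass: strip identifying fields, then publish only group-level summaries. The sketch below is illustrative only. The field names (`grower_name`, `field_id`, `county`, `soc_pct`), the set of fields treated as PII, and the minimum group size of 3 are all hypothetical assumptions; the actual rules require PI approval.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical set of fields treated as personally identifiable.
PII_FIELDS = {"grower_name", "phone", "field_id"}


def aggregate_for_release(records, group_key, value_key, min_group_size=3):
    """Drop PII fields, then report only group-level counts and means.

    Groups with fewer than min_group_size records are suppressed so that a
    single grower cannot be singled out from a published mean.
    """
    groups = defaultdict(list)
    for rec in records:
        # Strip identifying fields before any aggregation happens.
        clean = {k: v for k, v in rec.items() if k not in PII_FIELDS}
        groups[clean[group_key]].append(clean[value_key])
    return {
        key: {"n": len(vals), "mean": round(mean(vals), 2)}
        for key, vals in sorted(groups.items())
        if len(vals) >= min_group_size
    }


records = [
    {"grower_name": "A", "field_id": "F1", "county": "North", "soc_pct": 2.1},
    {"grower_name": "B", "field_id": "F2", "county": "North", "soc_pct": 2.5},
    {"grower_name": "C", "field_id": "F3", "county": "North", "soc_pct": 1.9},
    {"grower_name": "D", "field_id": "F4", "county": "South", "soc_pct": 3.0},
]
print(aggregate_for_release(records, "county", "soc_pct"))
# {'North': {'n': 3, 'mean': 2.17}}  (South is suppressed: only one record)
```

Suppressing small groups matters because a mean over one or two fields can effectively reveal an individual grower's result even after names are removed.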

Archive

Properly archiving our results supports the long-term storage and usefulness of our data.

It is important to protect data from accidental loss, corruption, and unauthorized access. This includes routinely making backup copies of data files or databases that can be used to restore the original data or to recover earlier versions of it. Backups protect against human error, hardware failure, virus attacks, power failure, and natural disasters.
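A routine backup can be as simple as copying a file under a timestamped name and verifying the copy with a checksum. The sketch below uses only the Python standard library; the function names and the naming scheme are hypothetical, and it does not replace the project's actual backup system or storage locations.

```python
import hashlib
import shutil
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 64 KiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def backup_file(src: Path, backup_dir: Path) -> Path:
    """Copy src into backup_dir under a timestamped name and verify the copy.

    Timestamped names keep earlier copies instead of overwriting them, so
    earlier versions of the file remain recoverable.
    """
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    dest = backup_dir / f"{src.stem}_{stamp}{src.suffix}"
    shutil.copy2(src, dest)  # copy2 also preserves file modification times
    if sha256_of(src) != sha256_of(dest):
        dest.unlink()  # discard a corrupted copy rather than keep it
        raise IOError(f"Checksum mismatch for backup of {src}")
    return dest
```

A copy on the same disk guards against accidental edits and deletions but not against hardware failure or natural disasters, so `backup_dir` should point to separate media or off-site storage.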

Quality Assurance / Quality Control (QA/QC)

Data quality management prevents data defects that hinder our ability to apply data to our science-based conservation efforts. Defects include incorrectly entered data, invalid data, and missing or lost data. QA/QC processes should be incorporated into every stage of the data life cycle.
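Each of the defect types above can be caught by a simple automated check: missing values, entries that are not numbers (often typos), and numbers outside a plausible range. The sketch below is a minimal example under assumed field names (`sample_id`, `ph`, `soc_pct`). The pH bounds of 0 to 14 reflect the pH scale, but the ranges and required fields should come from the project's own protocols.

```python
# Hypothetical plausible ranges per field; set these from project protocols.
VALID_RANGES = {"ph": (0.0, 14.0), "soc_pct": (0.0, 100.0)}
REQUIRED = ("sample_id", "ph", "soc_pct")


def qc_record(rec):
    """Return a list of QA/QC flags for one record (empty means it passed)."""
    flags = []
    for field in REQUIRED:
        value = rec.get(field)
        # Missing or lost data.
        if value is None or value == "":
            flags.append(f"{field}: missing")
            continue
        if field in VALID_RANGES:
            # Incorrectly entered data, e.g. text typed into a numeric column.
            try:
                x = float(value)
            except (TypeError, ValueError):
                flags.append(f"{field}: not numeric ({value!r})")
                continue
            # Invalid data outside the plausible range (NaN also fails here).
            lo, hi = VALID_RANGES[field]
            if not lo <= x <= hi:
                flags.append(f"{field}: out of range ({x})")
    return flags


print(qc_record({"sample_id": "S1", "ph": "6.8", "soc_pct": "2.4"}))  # []
print(qc_record({"sample_id": "S2", "ph": "68", "soc_pct": ""}))
# ['ph: out of range (68.0)', 'soc_pct: missing']
```

Returning flags rather than silently dropping records keeps questionable values visible for review, which fits the goal of documenting QA/QC decisions throughout the life cycle.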